latest version v1.9 - last update 10 Apr 2010

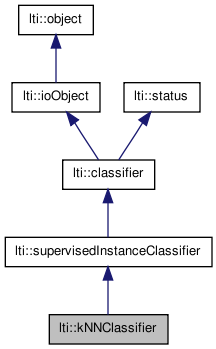

Implements a k nearest neighbors search based classifier. More...

#include <ltiKNNClassifier.h>

Classes | |

| class | parameters |

| the parameters for the class kNNClassifier More... | |

| struct | pointInfo |

| Information about a feature point. More... | |

Public Member Functions | |

| kNNClassifier () | |

| kNNClassifier (const kNNClassifier &other) | |

| virtual | ~kNNClassifier () |

| virtual const char * | getTypeName () const |

| kNNClassifier & | copy (const kNNClassifier &other) |

| kNNClassifier & | operator= (const kNNClassifier &other) |

| virtual classifier * | clone () const |

| const kNNClassifier::parameters & | getParameters () const |

| virtual bool | train (const dmatrix &input, const ivector &ids) |

| virtual bool | train (const dmatrix &input, const ivector &ids, const ivector &pointIds) |

| virtual bool | trainObject (const dmatrix &input, int &id) |

| virtual bool | trainObject (const dmatrix &input, int &id, const ivector &pointIds) |

| virtual bool | trainObjectId (const dmatrix &input, const int id) |

| virtual bool | trainObjectId (const dmatrix &input, const int id, const ivector &pointIds) |

| virtual bool | classify (const dvector &feature, outputVector &result) const |

| virtual bool | classify (const dmatrix &features, outputVector &result) const |

| virtual bool | classify (const dmatrix &features, dmatrix &result) const |

| int | getColumnId (const int columnId) const |

| virtual bool | classify (const dvector &feature, outputVector &result, std::vector< pointInfo > &points) const |

| virtual bool | nearest (const dvector &feature, pointInfo &nearestPoint) const |

| virtual bool | write (ioHandler &handler, const bool complete=true) const |

| virtual bool | read (ioHandler &handler, const bool complete=true) |

| void | build () |

| void | clear () |

Protected Types | |

| typedef std::map< int, int > | idMap_type |

| typedef kdTree< dvector, std::pair< int, int > > | treeType |

Protected Member Functions | |

| void | buildIdMaps (const ivector &ids) |

| void | defineOutputTemplate () |

| bool | classify (const dvector &feature, outputVector &output, std::multimap< double, treeType::element * > &resList) const |

Static Protected Member Functions | |

Reliability weighting functions | |

| static double | linear (const double &ratio, const double &threshold) |

| static double | exponential (const double &ratio, const double &threshold) |

Protected Attributes | |

| idMap_type | idMap |

| idMap_type | rIdMap |

| int | nClasses |

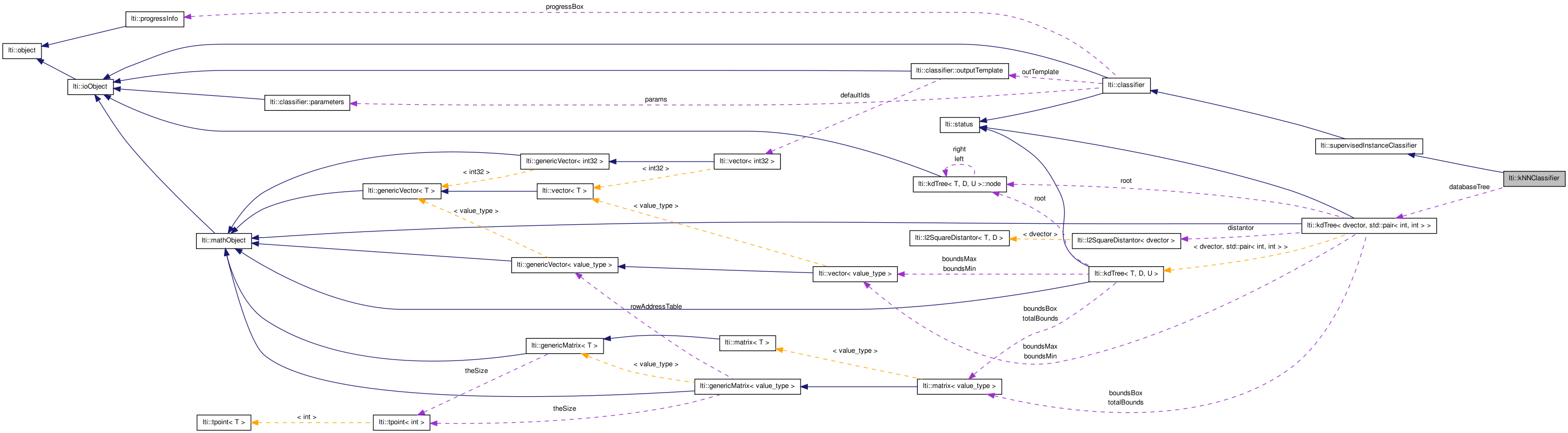

| treeType | databaseTree |

| std::vector< double > | classWeight |

| int | minPointsPerClass |

| int | maxPointsPerClass |

Implements a k nearest neighbors search based classifier.

The simplest case of a k-nearest neighbor classifier is k=1, also known as a nearest neighbor classifier, which assigns to a test point x the class of the nearest sample point.

For k>1, a k-nearest neighbor classifier assigns to a point x the class most represented among its k nearest neighbors. In the simplest case, each of the k nearest sample points votes with the same weight for its class. In more sophisticated cases, each point votes with a weight that depends on the total number of sample points of its class and/or on the ratio between the distance of the test point to the winner sample and the distance of the test point to the first sample point belonging to another class.
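The equal-weight voting scheme described above can be sketched in plain C++ as follows. This is a minimal brute-force illustration, not the LTI-Lib implementation: the `Sample` type and the functions `squaredDistance` and `classifyKnn` are hypothetical names introduced only for this example, and ties are resolved here in favor of the smallest class id, which is an assumption of the sketch.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <map>
#include <utility>
#include <vector>

// One labelled training sample (hypothetical type, not part of LTI-Lib).
struct Sample {
  std::vector<double> point;
  int classId;
};

// Squared Euclidean distance. It orders neighbors exactly like the true
// Euclidean distance, so the square root can be skipped.
double squaredDistance(const std::vector<double>& a,
                       const std::vector<double>& b) {
  assert(a.size() == b.size());
  double sum = 0.0;
  for (std::size_t i = 0; i < a.size(); ++i) {
    const double d = a[i] - b[i];
    sum += d * d;
  }
  return sum;
}

// Brute-force k-NN with equal-weight voting. Returns the id of the class
// with most votes among the k nearest samples (ties: smallest class id).
int classifyKnn(const std::vector<Sample>& samples,
                const std::vector<double>& x,
                std::size_t k) {
  assert(!samples.empty() && k > 0);
  std::vector<std::pair<double, int> > byDistance;  // (distance, classId)
  for (const Sample& s : samples) {
    byDistance.push_back(
        std::make_pair(squaredDistance(s.point, x), s.classId));
  }
  k = std::min(k, byDistance.size());
  std::partial_sort(byDistance.begin(), byDistance.begin() + k,
                    byDistance.end());

  std::map<int, int> votes;  // classId -> number of votes
  for (std::size_t i = 0; i < k; ++i) {
    ++votes[byDistance[i].second];
  }
  int winner = votes.begin()->first;
  for (const auto& v : votes) {
    if (v.second > votes[winner]) winner = v.first;
  }
  return winner;
}
```

With k=1 the function reduces to a nearest neighbor classifier. A larger k lets more distant samples outvote the closest one, which is why the choice of k matters.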

Currently, only the Euclidean distance is supported.

This classifier uses a kd-Tree to perform the search efficiently, and therefore inherits the drawbacks of a normal kd-Tree: it is not suitable for high-dimensional spaces. If you work with high-dimensional spaces, try increasing the bucket size or activating the best-bin-first mode, which David Lowe suggested as a way to obtain an approximate solution (accurate enough) in much less time.

This classifier differs slightly from the other LTI-Lib classifiers. Since the whole training set is stored as sample points, it is useful in many applications to obtain, besides the winner class, the exact winner samples. Therefore, this class manages two sets of ID numbers: one set for the class IDs, which is used in the same way as in all other LTI-Lib classifiers, and a second set with an ID for each sample point. This second set can be given explicitly or generated automatically. You can then, for example, use tables containing additional information for each winner point, accessed through the point ID.

Example:

    #include <iostream>
    #include <fstream>
    #include "ltiKNNClassifier.h"
    #include "ltiLispStreamHandler.h"

    // ...

    double inData[] = {-1,-1,  -1, 0,  -1,+1,
                       +0,+1,  +1,+1,  +1,+0,
                       +1,-1,  +0,-1,  +0,+0};

    lti::dmatrix inputs(9,2,inData);     // training vectors

    int idsData[] = {1,0,2,1,0,2,1,0,1}; // and the respective ids
    lti::ivector ids(9,idsData);

    lti::kNNClassifier knn;  // our nearest neighbor classifier

    lti::kNNClassifier::parameters param;
    param.useReliabilityMeasure = false;
    knn.setParameters(param);

    // we want to see some info while training
    lti::streamProgressInfo prog(std::cout);
    knn.setProgressObject(prog);

    // train the classifier
    knn.train(inputs,ids);

    // let us save our classifier for future use
    // in the file called knn.dat
    std::ofstream out("knn.dat");
    lti::lispStreamHandler lsh(out);

    // save the classifier
    knn.write(lsh);
    // close the file
    out.close();

    // show some results with the same training set:

    lti::kNNClassifier::outputVector outv; // here we will get some
                                           // classification results
    std::cout << std::endl << "Results: " << std::endl;

    int id;
    lti::dvector sample(2,0.0);
    // generate some points and check which would be the winner class
    for (sample.at(0) = -1; sample.at(0) <= 1; sample.at(0)+=0.25) {
      for (sample.at(1) = -1; sample.at(1) <= 1; sample.at(1)+=0.25) {

        knn.classify(sample,outv);
        std::cout << "Input " << sample << " \tOutput: ";
        outv.getId(outv.getWinnerUnit(),id);
        std::cout << id;
        std::cout << std::endl;
      }
    }
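The idea of keeping a point ID next to the class ID, so that additional information can be looked up for the winner sample, can be illustrated with a small standalone sketch. It does not use LTI-Lib: `StoredPoint`, `nearestIndex`, `describeWinner` and the side table `infoByPointId` are hypothetical names chosen for this example, and the search is a plain linear scan rather than a kd-Tree.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// Hypothetical stand-in for a stored sample: the feature point plus the
// two ids (class id and point id).
struct StoredPoint {
  std::vector<double> point;
  int classId;
  int pointId;
};

// Returns the index of the stored point closest to x (squared Euclidean
// distance, linear scan).
std::size_t nearestIndex(const std::vector<StoredPoint>& db,
                         const std::vector<double>& x) {
  assert(!db.empty());
  std::size_t best = 0;
  double bestDist = -1.0;
  for (std::size_t i = 0; i < db.size(); ++i) {
    assert(db[i].point.size() == x.size());
    double d = 0.0;
    for (std::size_t j = 0; j < x.size(); ++j) {
      const double diff = db[i].point[j] - x[j];
      d += diff * diff;
    }
    if (bestDist < 0.0 || d < bestDist) {
      bestDist = d;
      best = i;
    }
  }
  return best;
}

// Looks up application data attached to the winner sample via its point id.
std::string describeWinner(const std::vector<StoredPoint>& db,
                           const std::map<int, std::string>& infoByPointId,
                           const std::vector<double>& x) {
  const StoredPoint& winner = db[nearestIndex(db, x)];
  const std::map<int, std::string>::const_iterator it =
      infoByPointId.find(winner.pointId);
  return it == infoByPointId.end() ? std::string("<no info>") : it->second;
}
```

The side table is keyed by point id only, so it can live entirely outside the classifier and be extended without retraining.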

typedef std::map<int,int> lti::kNNClassifier::idMap_type [protected] |

Type for maps that translate ids from internal to external and vice versa.
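A pair of such maps could be filled as in the following sketch. It only illustrates the data structure: the function name `buildMaps` is hypothetical, and assigning internal ids in order of first appearance, starting at 0, is an assumption of this example rather than documented behavior of buildIdMaps().

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <vector>

typedef std::map<int, int> IdMap;  // same shape as idMap_type

// Fills both maps from the class ids given at training time. Internal ids
// are assigned here in order of first appearance, starting at 0; that
// ordering is an assumption of this sketch.
void buildMaps(const std::vector<int>& ids, IdMap& extToInt, IdMap& intToExt) {
  extToInt.clear();
  intToExt.clear();
  for (std::size_t i = 0; i < ids.size(); ++i) {
    if (extToInt.find(ids[i]) == extToInt.end()) {
      const int internal = static_cast<int>(extToInt.size());
      extToInt[ids[i]] = internal;
      intToExt[internal] = ids[i];
    }
  }
  // Both directions always describe the same set of classes.
  assert(extToInt.size() == intToExt.size());
}
```

Keeping both directions avoids a linear search when converting a result column back to the id the user supplied.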

typedef kdTree< dvector, std::pair<int,int> > lti::kNNClassifier::treeType [protected] |

| lti::kNNClassifier::kNNClassifier | ( | ) |

default constructor

| lti::kNNClassifier::kNNClassifier | ( | const kNNClassifier & | other | ) |

copy constructor

| other | the object to be copied |

| virtual lti::kNNClassifier::~kNNClassifier | ( | ) | [virtual] |

destructor

| void lti::kNNClassifier::build | ( | ) |

Finish a training process.

If you used the methods trainObject() or trainObjectId() you must call this method in order to complete the training process.

If you used one of the train() methods, you must avoid calling this method.

Remember that the "incremental" training mode with trainObject() or trainObjectId() cannot be combined with an "at-once" training step using the method train().

| void lti::kNNClassifier::buildIdMaps | ( | const ivector & | ids | ) | [protected] |

mapping of the ids

| bool lti::kNNClassifier::classify | ( | const dvector & | feature, | |

| outputVector & | output, | |||

| std::multimap< double, treeType::element * > & | resList | |||

| ) | const [protected] |

Helper for classification.

| virtual bool lti::kNNClassifier::classify | ( | const dvector & | feature, | |

| outputVector & | result, | |||

| std::vector< pointInfo > & | points | |||

| ) | const [virtual] |

Classification.

Classifies the feature and returns the outputVector object with the classification result.

NOTE: This method is NOT really const. Although the main members of the kNNClassifier are not changed, some state variables used for efficiency are. Thus, it is not safe to use the same instance of the kNNClassifier in two different threads.

| feature | pattern to be classified | |

| result | of the classifications as a classifier::outputVector | |

| points | vector sorted in increasing order of the distances to the feature point and containing two ID numbers. The first one corresponds to the class id, the second one to the point id. Also a const pointer to the feature point of the train set and the distance to that point are contained in the pointInfo structure |
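The shape of the `points` result (sorted by increasing distance, carrying both ids, a const pointer to the training point and the distance) can be sketched without LTI-Lib as follows. `NeighborInfo`, `TrainingPoint`, `euclidean`, `closer` and `kNearest` are hypothetical names for this example. The member names of the real pointInfo structure are not shown in this section, so the field names below are assumptions.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical record mirroring the description above: both ids, a const
// pointer to the training point and the distance to the query.
struct NeighborInfo {
  int classId;
  int pointId;
  const std::vector<double>* point;
  double distance;
};

struct TrainingPoint {
  std::vector<double> point;
  int classId;
  int pointId;
};

double euclidean(const std::vector<double>& a, const std::vector<double>& b) {
  assert(a.size() == b.size());
  double sum = 0.0;
  for (std::size_t i = 0; i < a.size(); ++i) {
    sum += (a[i] - b[i]) * (a[i] - b[i]);
  }
  return std::sqrt(sum);
}

bool closer(const NeighborInfo& a, const NeighborInfo& b) {
  return a.distance < b.distance;
}

// Returns the k nearest training points, sorted by increasing distance.
// The pointers refer into `db`, which must outlive the result.
std::vector<NeighborInfo> kNearest(const std::vector<TrainingPoint>& db,
                                   const std::vector<double>& x,
                                   std::size_t k) {
  std::vector<NeighborInfo> all;
  for (std::size_t i = 0; i < db.size(); ++i) {
    NeighborInfo info = {db[i].classId, db[i].pointId, &db[i].point,
                         euclidean(db[i].point, x)};
    all.push_back(info);
  }
  k = std::min(k, all.size());
  std::partial_sort(all.begin(), all.begin() + k, all.end(), closer);
  all.resize(k);
  return all;
}
```

Because the records hold pointers instead of copies, the caller can inspect the winner samples cheaply, but must not use them after the training data has been cleared.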

| virtual bool lti::kNNClassifier::classify | ( | const dmatrix & | features, | |

| dmatrix & | result | |||

| ) | const [virtual] |

Classification.

Classifies all features (the rows of the matrix) and returns for each of them a vector of unnormalized probabilities, coded in the rows of the result matrix.

Since no classifier::outputVector is constructed, only the classification "raw data" is produced.

This method is used in recognition tasks based on many local hints, for which the individual classification of each feature vector would cost too much time.

Each column of the output matrix represents one object. To get the id represented by a column you can use the outputTemplate of the classifier:

    kNNClassifier knn;
    knn.train(data);
    int columnId = knn.getOutputTemplate().getIds().at(columnNumber);

or the shortcut method of this class, getColumnId().

NOTE: This method is NOT really const. Although the main members of the kNNClassifier are not changed, some state variables used for efficiency are. Thus, it is not safe to use the same instance of the kNNClassifier in two different threads.

| features | patterns to be classified; each row is one feature | |

| result | of the classifications as a dmatrix with one row per feature and one column per object |
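The layout of the result matrix (one row of unnormalized votes per feature, one column per object) can be illustrated with this standalone sketch. It is a brute-force approximation with equal-weight votes, not the library code: `Matrix`, `voteMatrix` and `classIndex` (the internal column index of each training point) are hypothetical names for this example.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

typedef std::vector<std::vector<double> > Matrix;  // one vector per row

// For every feature (row of `features`) counts the votes of its k nearest
// training points. Row f of the result holds the unnormalized votes for
// feature f; column c corresponds to internal class index c.
Matrix voteMatrix(const Matrix& train, const std::vector<int>& classIndex,
                  std::size_t nClasses, const Matrix& features,
                  std::size_t k) {
  assert(train.size() == classIndex.size());
  Matrix result(features.size(), std::vector<double>(nClasses, 0.0));
  for (std::size_t f = 0; f < features.size(); ++f) {
    std::vector<std::pair<double, int> > hits;  // (squared distance, class)
    for (std::size_t t = 0; t < train.size(); ++t) {
      assert(train[t].size() == features[f].size());
      double d = 0.0;
      for (std::size_t j = 0; j < train[t].size(); ++j) {
        const double diff = train[t][j] - features[f][j];
        d += diff * diff;
      }
      hits.push_back(std::make_pair(d, classIndex[t]));
    }
    const std::size_t n = std::min(k, hits.size());
    std::partial_sort(hits.begin(), hits.begin() + n, hits.end());
    for (std::size_t i = 0; i < n; ++i) {
      assert(hits[i].second >= 0 &&
             static_cast<std::size_t>(hits[i].second) < nClasses);
      result[f][hits[i].second] += 1.0;
    }
  }
  return result;
}
```

Returning raw votes leaves normalization and the final decision to the caller, which is convenient when many local features are combined afterwards.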

| virtual bool lti::kNNClassifier::classify | ( | const dmatrix & | features, | |

| outputVector & | result | |||

| ) | const [virtual] |

Classification.

Classifies all features (the rows of the matrix) and returns the outputVector object with the classification result.

The classification will be the accumulation of the voting for all given points, assuming that they all belong to the same class.

NOTE: This method is NOT really const. Although the main members of the kNNClassifier are not changed, some state variables used for efficiency are. Thus, it is not safe to use the same instance of the kNNClassifier in two different threads.

| features | patterns to be classified; each row is one feature | |

| result | of the classifications as a classifier::outputVector |
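The accumulation of votes over all given points can be sketched as a column-wise sum followed by a maximum search. The code below is a self-contained illustration under the assumption that the per-feature votes are already available as a matrix. `Matrix`, `accumulateVotes` and `winnerColumn` are hypothetical names, not LTI-Lib functions.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

typedef std::vector<std::vector<double> > Matrix;  // one vector per row

// Sums the per-feature votes column by column.
std::vector<double> accumulateVotes(const Matrix& votes) {
  assert(!votes.empty());
  std::vector<double> total(votes[0].size(), 0.0);
  for (std::size_t r = 0; r < votes.size(); ++r) {
    assert(votes[r].size() == total.size());
    for (std::size_t c = 0; c < total.size(); ++c) {
      total[c] += votes[r][c];
    }
  }
  return total;
}

// Index of the column with the largest accumulated vote (first one on ties).
std::size_t winnerColumn(const std::vector<double>& total) {
  assert(!total.empty());
  std::size_t best = 0;
  for (std::size_t c = 1; c < total.size(); ++c) {
    if (total[c] > total[best]) best = c;
  }
  return best;
}
```

The sum is only meaningful under the stated assumption that all rows describe the same object. A single outlier row can otherwise shift the winner.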

| virtual bool lti::kNNClassifier::classify | ( | const dvector & | feature, | |

| outputVector & | result | |||

| ) | const [virtual] |

Classification.

Classifies the feature and returns the outputVector object with the classification result.

NOTE: This method is NOT really const. Although the main members of the kNNClassifier are not changed, some state variables used for efficiency are. Thus, it is not safe to use the same instance of the kNNClassifier in two different threads.

| feature | pattern to be classified | |

| result | of the classifications as a classifier::outputVector |

Implements lti::supervisedInstanceClassifier.

| void lti::kNNClassifier::clear | ( | ) |

Resets all values and deletes the content.

If you want to forget the sample points and start giving new points with trainObject(), you need to call this method first.

| virtual classifier* lti::kNNClassifier::clone | ( | ) | const [virtual] |

returns a pointer to a clone of this classifier.

Implements lti::classifier.

| kNNClassifier& lti::kNNClassifier::copy | ( | const kNNClassifier & | other | ) |

copy data of "other" classifier.

| other | the classifier to be copied |

Reimplemented from lti::supervisedInstanceClassifier.

| void lti::kNNClassifier::defineOutputTemplate | ( | ) | [protected] |

Define the output template.

| int lti::kNNClassifier::getColumnId | ( | const int | columnId | ) | const [inline] |

Shortcut method to comfortably access the object id for the column of the result matrix of the classify(const dmatrix&,dmatrix&) method.

It returns a negative value if the input column index is invalid.

References lti::genericVector< T >::at(), lti::classifier::outputTemplate::getIds(), lti::classifier::getOutputTemplate(), and nClasses.
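The documented contract (return the id stored for a column, or a negative value for an invalid index) corresponds to a bounds-checked lookup. The following standalone sketch shows that pattern. `columnToId` and `columnIds` are hypothetical names, and returning exactly -1 is an assumption, since the text above only promises "a negative value".

```cpp
#include <cstddef>
#include <vector>

// Returns the id stored for the given column, or -1 (an assumed negative
// sentinel) if the column index is out of range.
int columnToId(const std::vector<int>& columnIds, int column) {
  if (column < 0 || static_cast<std::size_t>(column) >= columnIds.size()) {
    return -1;
  }
  return columnIds[static_cast<std::size_t>(column)];
}
```

A negative sentinel only works if valid ids are never negative, so callers that allow negative class ids would need a different error signal.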

| const kNNClassifier::parameters& lti::kNNClassifier::getParameters | ( | ) | const |

returns used parameters

Reimplemented from lti::supervisedInstanceClassifier.

| virtual const char* lti::kNNClassifier::getTypeName | ( | ) | const [virtual] |

returns the name of this type ("kNNClassifier")

Reimplemented from lti::supervisedInstanceClassifier.

| virtual bool lti::kNNClassifier::nearest | ( | const dvector & | feature, | |

| pointInfo & | nearestPoint | |||

| ) | const [virtual] |

Get only the nearest point to the given vector.

Sometimes it is not necessary to have the probability distribution for the objects computed with the classify() methods. Only the nearest point can be of interest. This method provides an efficient way to just search for the nearest point and obtain its data.

| feature | reference multidimensional point | |

| nearestPoint | nearest point in the training set to the presented point. |

| kNNClassifier& lti::kNNClassifier::operator= | ( | const kNNClassifier & | other | ) |

alias for copy member

| other | the classifier to be copied |

Reimplemented from lti::supervisedInstanceClassifier.

| virtual bool lti::kNNClassifier::read | ( | ioHandler & | handler, | |

| const bool | complete = true | |||

| ) | [virtual] |

read the classifier from the given ioHandler

| handler | the ioHandler to be used | |

| complete | if true (the default) the enclosing begin/end will also be read, otherwise only the data block will be read. |

Reimplemented from lti::classifier.

| virtual bool lti::kNNClassifier::train | ( | const dmatrix & | input, | |

| const ivector & | ids, | |||

| const ivector & | pointIds | |||

| ) | [virtual] |

Supervised training.

The vectors in the input matrix are arranged row-wise, i.e. each row contains one data vector. The ids vector contains the class label for each row.

This is an alternative method to trainObject(). You cannot add further objects after you have called train(), nor can you call train() after calling trainObject(), since all data provided with trainObject() would be removed. In other words, you must decide if you want to supply all objects separately or if you want to give them all simultaneously, but you cannot combine both methods.

The id of each feature point is taken from the corresponding entry of pointIds.

| input | the matrix with the input vectors (each row is a training vector) | |

| ids | vector of class ids for each input point | |

| pointIds | vector containing the ids for each single feature point of the training set. |

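| virtual bool lti::kNNClassifier::train | ( | const dmatrix & | input, | |

| const ivector & | ids | |||

| ) | [virtual] |

Supervised training.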

The vectors in the input matrix are arranged row-wise, i.e. each row contains one data vector. The ids vector contains the class label for each row.

This is an alternative method to trainObject(). You cannot add further objects after you have called train(), nor can you call train() after calling trainObject(), since all data provided with trainObject() would be removed. In other words, you must decide if you want to supply all objects separately or if you want to give them all simultaneously, but you cannot combine both methods.

The index of the corresponding matrix row is used as the id of each feature point.

| input | the matrix with the input vectors (each row is a training vector) | |

| ids | vector of class ids for each input point |

Implements lti::supervisedInstanceClassifier.

| virtual bool lti::kNNClassifier::trainObject | ( | const dmatrix & | input, | |

| int & | id, | |||

| const ivector & | pointIds | |||

| ) | [virtual] |

Adds an object to this classifier.

The id is given automatically and returned in the parameter.

After you have trained several objects, you must call the build() method to finish the training process. If you don't do it, the classifier will ignore everything you have provided.

This is an alternative method to train(). You cannot add further objects after you have called train, nor can you call train() after calling this method, since all data provided with trainObject would be removed. In other words, you must decide if you want to supply all objects separately or if you want to give them all simultaneously, but you cannot combine both methods.

Note that the difference with the method trainObjectId() is that here you will receive as a returned argument the id assigned to the object, while in the method trainObjectId() you decide which id should be used for the given object.

| input | each row of this matrix represents a point in the feature space belonging to one single class. | |

| id | output parameter that receives the automatically assigned id of the class represented by the points in the input matrix. | |

| pointIds | each point in the input matrix will have its own id given by the entries of this vector, which must have a size equal to the number of rows of input. |

| virtual bool lti::kNNClassifier::trainObject | ( | const dmatrix & | input, | |

| int & | id | |||

| ) | [virtual] |

Adds an object to this classifier.

The id is given automatically and returned in the parameter.

After you have trained several objects, you must call the build() method to finish the training process. If you don't do it, the classifier will ignore everything you have provided.

This is an alternative method to train(). You cannot add further objects after you have called train, nor can you call train() after calling this method, since all data provided with trainObject would be removed. In other words, you must decide if you want to supply all objects separately or if you want to give them all simultaneously, but you cannot combine both methods.

Note that the difference with the method trainObjectId() is that here you receive as a returned argument the id assigned to the object, while in the method trainObjectId() you decide which id should be used for the given object.

The id of each point in the given matrix is its row index plus the number of points trained so far, i.e. the consecutive numbering of the sample points is simply continued.

| input | each row of this matrix represents a point in the feature space belonging to one single class. | |

| id | output parameter that receives the automatically assigned id of the class represented by the points in the input matrix. |
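The automatic numbering of point ids (row index plus the number of points trained so far) can be written down as a short standalone sketch. `nextPointIds` and `pointsSoFar` are hypothetical names used only to illustrate the rule stated above.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Returns the point ids for a newly added object with `rows` sample points,
// given that `pointsSoFar` points were already trained: the row index plus
// the number of points trained so far.
std::vector<int> nextPointIds(int pointsSoFar, std::size_t rows) {
  assert(pointsSoFar >= 0);
  std::vector<int> ids(rows);
  for (std::size_t r = 0; r < rows; ++r) {
    ids[r] = pointsSoFar + static_cast<int>(r);
  }
  return ids;
}
```

For instance, a first object with three rows would get the ids 0, 1 and 2 under this rule, and a second object with two rows would continue with 3 and 4.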

| virtual bool lti::kNNClassifier::trainObjectId | ( | const dmatrix & | input, | |

| const int | id, | |||

| const ivector & | pointIds | |||

| ) | [virtual] |

Adds an object to this classifier.

The object ID is given by the user.

After you have trained several objects, you must call the build() method to finish the training process. If you don't do it, the classifier will ignore everything you have provided.

This is an alternative method to train(). You cannot add further objects after you have called train, nor can you call train() after calling this method, since all data provided with trainObject would be removed. In other words, you must decide if you want to supply all objects separately or if you want to give them all simultaneously, but you cannot combine both methods.

Note that the difference with the method trainObject() is that here you directly provide the id to be used for the object, while the trainObject() method returns one id that is computed automatically.

| input | each row of this matrix represents a point in the feature space belonging to one single class. | |

| id | this id will be used for the class represented by the points in the input matrix. | |

| pointIds | each point in the input matrix will have its own ID, given by the entries in this vector, which must have a size equal to the number of rows of input. |

| virtual bool lti::kNNClassifier::trainObjectId | ( | const dmatrix & | input, | |

| const int | id | |||

| ) | [virtual] |

Adds an object to this classifier.

The object ID is given by the user.

After you have trained several objects, you must call the build() method to finish the training process. If you don't do it, the classifier will ignore everything you have provided.

This is an alternative method to train(). You cannot add further objects after you have called train, nor can you call train() after calling this method, since all data provided with trainObject would be removed. In other words, you must decide if you want to supply all objects separately or if you want to give them all simultaneously, but you cannot combine both methods.

Note that the difference with the method trainObject() is that here you directly provide the id to be used for the object, while the trainObject() method returns one id that is computed automatically.

The id of each point in the given matrix is its row index plus the number of points trained so far, i.e. the consecutive numbering of the sample points is simply continued.

| input | each row of this matrix represents a point in the feature space belonging to one single class. | |

| id | this id will be used for the class represented by the points in the input matrix. |

| virtual bool lti::kNNClassifier::write | ( | ioHandler & | handler, | |

| const bool | complete = true | |||

| ) | const [virtual] |

write the classifier in the given ioHandler

| handler | the ioHandler to be used | |

| complete | if true (the default) the enclosing begin/end will also be written, otherwise only the data block will be written. |

Reimplemented from lti::classifier.

std::vector<double> lti::kNNClassifier::classWeight [protected] |

Optionally, a scalar weight can be applied to each class as an a-priori value.

The std::vector is used due to the push_back interface.

It is accessed with the internal id.

treeType lti::kNNClassifier::databaseTree [protected] |

The database with accelerated nearest neighbor search.

The kdTree uses as n-dimensional points dvectors and as data requires a std::pair containing the class id and the point id.

idMap_type lti::kNNClassifier::idMap [protected] |

Map from external id to internal id, used for training.

int lti::kNNClassifier::maxPointsPerClass [protected] |

Maximum number of points per class.

This attribute is valid only after the complete training process

int lti::kNNClassifier::minPointsPerClass [protected] |

Minimum number of points per class.

This attribute is valid only after the complete training process

int lti::kNNClassifier::nClasses [protected] |

Number of classes currently in the classifier.

Referenced by getColumnId().

idMap_type lti::kNNClassifier::rIdMap [protected] |

Map from internal to external id, used for training.