latest version v1.9 - last update 10 Apr 2010

|

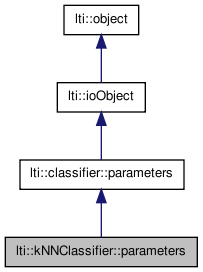

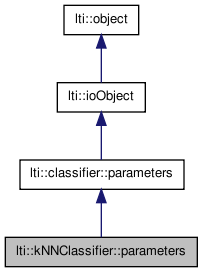

the parameters for the class kNNClassifier

#include <ltiKNNClassifier.h>

Public Member Functions

| parameters () | |

| parameters (const parameters &other) | |

| virtual | ~parameters () |

| const char * | getTypeName () const |

| parameters & | copy (const parameters &other) |

| parameters & | operator= (const parameters &other) |

| virtual classifier::parameters * | clone () const |

| virtual bool | write (ioHandler &handler, const bool complete=true) const |

| virtual bool | read (ioHandler &handler, const bool complete=true) |

Public Attributes

| int | kNN |

| bool | normalizeData |

| bool | normalizeOutput |

Nearest neighbor search options

The search is optimized using a kd-Tree. The parameters in this group control how the tree is organized or how to search for the data therein.

| bool | bestBinFirst |

| int | eMax |

| int | bucketSize |

Reliability

| enum | eReliabilityMode { Linear, Exponential } |

| bool | useReliabilityMeasure |

| eReliabilityMode | reliabilityMode |

| double | reliabilityThreshold |

| int | maxUnreliableNeighborhood |

the parameters for the class kNNClassifier

| enum lti::kNNClassifier::parameters::eReliabilityMode |

If useReliabilityMeasure is true, the weight of a point can be determined using the ratio of two distances, but there are many possible ways to take this ratio into account.

Several simple modes are provided here. Let d1 be the distance to the winner sample point and d2 the distance to the closest point belonging to a different class than the winner.

| lti::kNNClassifier::parameters::parameters | ( | ) |

default constructor

Reimplemented from lti::classifier::parameters.

| lti::kNNClassifier::parameters::parameters | ( | const parameters & | other | ) |

copy constructor

| other | the parameters object to be copied |

| virtual lti::kNNClassifier::parameters::~parameters | ( | ) | [virtual] |

destructor

Reimplemented from lti::classifier::parameters.

| virtual classifier::parameters* lti::kNNClassifier::parameters::clone | ( | ) | const [virtual] |

returns a pointer to a clone of the parameters

Reimplemented from lti::classifier::parameters.

| parameters& lti::kNNClassifier::parameters::copy | ( | const parameters & | other | ) |

copy the contents of a parameters object

| other | the parameters object to be copied |

| const char* lti::kNNClassifier::parameters::getTypeName | ( | ) | const [virtual] |

returns name of this type.

Reimplemented from lti::classifier::parameters.

| parameters& lti::kNNClassifier::parameters::operator= | ( | const parameters & | other | ) |

copy the contents of a parameters object

| other | the parameters object to be copied |

| virtual bool lti::kNNClassifier::parameters::read | ( | ioHandler & | handler, | |

| const bool | complete = true | |||

| ) | [virtual] |

read the parameters from the given ioHandler

| handler | the ioHandler to be used | |

| complete | if true (the default) the enclosing begin/end will also be read, otherwise only the data block will be read. |

Reimplemented from lti::classifier::parameters.

| virtual bool lti::kNNClassifier::parameters::write | ( | ioHandler & | handler, | |

| const bool | complete = true | |||

| ) | const [virtual] |

write the parameters in the given ioHandler

| handler | the ioHandler to be used | |

| complete | if true (the default) the enclosing begin/end will also be written, otherwise only the data block will be written. |

Reimplemented from lti::classifier::parameters.

| bool lti::kNNClassifier::parameters::bestBinFirst |

Best Bin First.

If this parameter is set to true, the Best Bin First (BBF) algorithm of Lowe et al. will be applied. It is an approximate algorithm appropriate for spaces of relatively high dimensionality (100 or so), in which some improbable bins are discarded during the search.

Note that if you activate this, the result is possibly optimal, but optimality is not guaranteed.

Default value: false

| int lti::kNNClassifier::parameters::bucketSize |

Bucket size.

Each node of the kd-Tree can contain several points. The search within a node is made with linear search, i.e. the brute force method computing the distances to each single point. This parameter indicates the number of points that will be stored in the node. The name "bucket" comes from the original kd-Tree paper.

Default value: 5
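The brute-force search inside a single bucket can be sketched as follows. This is a minimal standalone illustration, not LTI-Lib code: the `Point` alias and the functions `squaredDistance` and `nearestInBucket` are hypothetical names introduced only for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical point type for this sketch; not an LTI-Lib type.
using Point = std::vector<double>;

// Squared Euclidean distance between two points of equal dimension.
double squaredDistance(const Point& a, const Point& b) {
  assert(a.size() == b.size());
  double sum = 0.0;
  for (std::size_t i = 0; i < a.size(); ++i) {
    const double diff = a[i] - b[i];
    sum += diff * diff;
  }
  return sum;
}

// Linear (brute-force) search within one bucket: the distance to every
// stored point is computed and the index of the closest one is returned.
std::size_t nearestInBucket(const std::vector<Point>& bucket,
                            const Point& query) {
  assert(!bucket.empty());
  std::size_t best = 0;
  double bestDist = std::numeric_limits<double>::max();
  for (std::size_t i = 0; i < bucket.size(); ++i) {
    const double d = squaredDistance(bucket[i], query);
    if (d < bestDist) {
      bestDist = d;
      best = i;
    }
  }
  return best;
}
```

The cost of this loop grows linearly with the bucket size, which is why the bucket is normally kept small while the tree handles the coarse partitioning.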

| int lti::kNNClassifier::parameters::eMax |

Maximum visit number per leaf node allowed.

This is only required if you specify a best-bin-first (BBF) search. It is the maximal number of visits allowed for leaf nodes (in the original paper it is called Emax).

Usually this value depends on many factors. You can set it as a percentage of the expected number of leaf nodes (approximately number of points/bucketSize).

Default value: 100, but you should set it explicitly for BBF to work appropriately.
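One way to derive a value for eMax from the rule of thumb above is sketched below. The helper names `expectedLeafCount` and `suggestEMax` are hypothetical and not part of LTI-Lib, and the fraction to use is an assumption that depends on the data.

```cpp
#include <algorithm>
#include <cassert>

// Approximate number of leaf nodes: number of points / bucketSize,
// rounded up so that a partially filled bucket still counts as a leaf.
int expectedLeafCount(int numPoints, int bucketSize) {
  assert(numPoints >= 0 && bucketSize > 0);
  return (numPoints + bucketSize - 1) / bucketSize;
}

// Hypothetical helper: picks eMax as a fraction (0, 1] of the expected
// number of leaf nodes, but never less than one visit.
int suggestEMax(int numPoints, int bucketSize, double fraction) {
  assert(fraction > 0.0 && fraction <= 1.0);
  const int leaves = expectedLeafCount(numPoints, bucketSize);
  return std::max(1, static_cast<int>(leaves * fraction));
}
```

For example, 10000 points with the default bucketSize of 5 give about 2000 leaf nodes, so a fraction of 5% corresponds to the default eMax of 100.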

| int lti::kNNClassifier::parameters::kNN |

How many nearest neighbors should be determined per classification.

Default value: 1 (i.e. nearest neighbor classifier)

| int lti::kNNClassifier::parameters::maxUnreliableNeighborhood |

Maximal number of neighbors considered while detecting the second point belonging to another class than the winner.

If no point was found within this number of points, a "perfectly" reliable point will be assumed.

Default value: 20
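The bounded scan described above might look like the following sketch. It is standalone and makes assumptions: the `Neighbor` struct and `findOtherClassDistance` are hypothetical names, the neighbors are assumed to be already sorted by ascending distance, and the winner itself is assumed to count towards the limit (the library may count differently).

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical neighbor record: class label and distance to the query.
struct Neighbor {
  int classId;
  double distance;
};

// Scans neighbors sorted by ascending distance; sortedNeighbors[0] is the
// winner. At most maxUnreliableNeighborhood entries are inspected.
// Returns true and sets d2 when a point of another class is found.
// Returns false otherwise (d2 is left untouched), in which case the caller
// can treat the winner as "perfectly" reliable.
bool findOtherClassDistance(const std::vector<Neighbor>& sortedNeighbors,
                            int maxUnreliableNeighborhood,
                            double& d2) {
  assert(!sortedNeighbors.empty());
  const std::size_t budget =
      static_cast<std::size_t>(std::max(0, maxUnreliableNeighborhood));
  const std::size_t limit = std::min(sortedNeighbors.size(), budget);
  const int winnerClass = sortedNeighbors[0].classId;
  for (std::size_t i = 1; i < limit; ++i) {
    if (sortedNeighbors[i].classId != winnerClass) {
      d2 = sortedNeighbors[i].distance;
      return true;
    }
  }
  return false;
}
```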

| bool lti::kNNClassifier::parameters::normalizeData |

Normalize data to equal number of data samples.

The traditional k-NN classifier assumes that the a-priori probability of a class is given by the number of patterns belonging to the class divided by the total number of patterns. In many recognition applications, however, the classes are all equiprobable. If normalizeData is set to true, this second alternative is chosen: each sample is weighted by the inverse of the number of samples in its class. If set to false, each sample has the weight 1.

Default value: true
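The per-class sample weights implied by normalizeData can be sketched like this. The function `sampleWeights` is a hypothetical standalone helper written for illustration; it is not the library's implementation.

```cpp
#include <cassert>
#include <map>
#include <vector>

// Returns, for every class label in the training set, the vote weight of
// one sample of that class: 1/nc if normalizeData is true (nc = number of
// training samples of the class), or 1 otherwise.
std::map<int, double> sampleWeights(const std::vector<int>& trainingLabels,
                                    bool normalizeData) {
  assert(!trainingLabels.empty());
  std::map<int, int> counts;
  for (int label : trainingLabels) {
    ++counts[label];
  }
  std::map<int, double> weights;
  for (const auto& entry : counts) {
    weights[entry.first] = normalizeData ? 1.0 / entry.second : 1.0;
  }
  return weights;
}
```

With four training samples of class 0 and one of class 1, a neighbor of class 0 contributes 0.25 to the vote and a neighbor of class 1 contributes 1.0, so the over-represented class no longer dominates purely by size.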

| bool lti::kNNClassifier::parameters::normalizeOutput |

Normalize the output vector.

The k-nearest neighbor algorithm counts how many elements per class are present in the nearest k points to the test point. This voting can be altered by the normalizeData parameter so that each neighbor counts not as 1 but as 1/nc, where nc is the number of elements of the corresponding class in the training set.

The output can be returned "as is" by setting this parameter to false, or it can be normalized to become a probability value by setting this parameter to true.

Default value: true
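Turning the accumulated votes into probability-like values amounts to dividing by their sum. The sketch below shows only that step; `normalizeVotes` is a hypothetical name and not an LTI-Lib function.

```cpp
#include <cassert>
#include <numeric>
#include <vector>

// Scales a vector of non-negative class votes so that its entries sum to 1.
std::vector<double> normalizeVotes(const std::vector<double>& votes) {
  const double total = std::accumulate(votes.begin(), votes.end(), 0.0);
  assert(total > 0.0);
  std::vector<double> normalized;
  normalized.reserve(votes.size());
  for (double v : votes) {
    normalized.push_back(v / total);
  }
  return normalized;
}
```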

| eReliabilityMode lti::kNNClassifier::parameters::reliabilityMode |

Reliability mode used.

For possible values see eReliabilityMode.

Default value: Linear

| double lti::kNNClassifier::parameters::reliabilityThreshold |

Threshold value used for the reliability function.

Default value: 10, i.e. all distance ratios d2/d1 greater than 10 are considered with the same weight.
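The saturation effect of the threshold can be illustrated with a small sketch. It shows only the clamping of the ratio d2/d1. How the Linear and Exponential modes map the ratio to a weight is not specified here and is deliberately left out. `saturatedRatio` is a hypothetical helper, and returning the threshold for d1 = 0 is an assumption made to avoid a division by zero.

```cpp
#include <cassert>

// Returns the ratio d2/d1, saturated at reliabilityThreshold, so that all
// ratios above the threshold are treated alike.
// d1: distance to the winner sample, d2: distance to the closest sample
// of a different class (hence d2 >= d1).
double saturatedRatio(double d1, double d2, double reliabilityThreshold) {
  assert(d1 >= 0.0 && d2 >= d1 && reliabilityThreshold > 0.0);
  // Written as a product so that d1 == 0 needs no special case.
  if (d1 * reliabilityThreshold <= d2) {
    return reliabilityThreshold;
  }
  return d2 / d1;
}
```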

| bool lti::kNNClassifier::parameters::useReliabilityMeasure |

Use the reliability measure suggested by Lowe.

In his paper "Distinctive Image Features from Scale-Invariant Keypoints" (June 2003), Lowe suggested the use of a reliability measure for classification. It is defined as the ratio between the distance from the analyzed point p to the closest sample point w, and the distance from the same point p to the closest point that belongs to a class different from that of the point w.

This mode is usually used with kNN=1, and the normalization of the output (normalizeOutput) should be deactivated.

Default value: false
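The recommended combination of settings can be sketched with a stand-in structure. `KnnParametersSketch` and `makeReliabilityConfig` are hypothetical and exist only so that the example compiles without LTI-Lib. The field names and default values are copied from this page, and with the library itself the same attributes would be set on a `lti::kNNClassifier::parameters` object.

```cpp
#include <cassert>

// Stand-in mirroring the public attributes documented on this page.
// It is NOT the real lti::kNNClassifier::parameters class.
struct KnnParametersSketch {
  enum eReliabilityMode { Linear, Exponential };

  int kNN = 1;
  bool normalizeData = true;
  bool normalizeOutput = true;

  // Nearest neighbor search options
  bool bestBinFirst = false;
  int eMax = 100;
  int bucketSize = 5;

  // Reliability
  bool useReliabilityMeasure = false;
  eReliabilityMode reliabilityMode = Linear;
  double reliabilityThreshold = 10.0;
  int maxUnreliableNeighborhood = 20;
};

// Builds a configuration for the reliability measure: nearest neighbor
// classification (kNN = 1) with the output normalization switched off.
KnnParametersSketch makeReliabilityConfig() {
  KnnParametersSketch p;
  p.kNN = 1;
  p.useReliabilityMeasure = true;
  p.normalizeOutput = false;
  assert(p.kNN >= 1);
  return p;
}
```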