latest version v1.9 - last update 10 Apr 2010

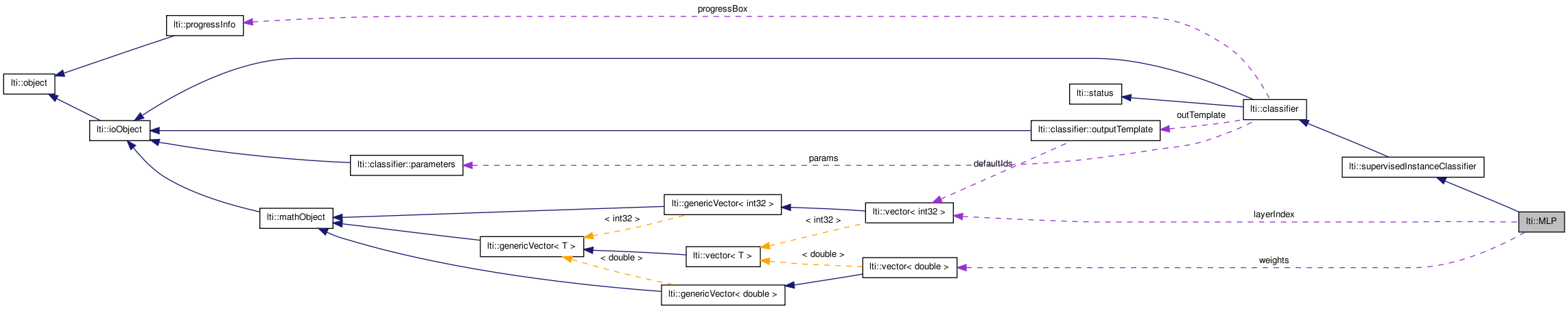

Multi-layer perceptrons.

#include <ltiMLP.h>

Classes

| Class | Description |
| --- | --- |
| activationFunctor | Parent class for all activation function functors. |
| linearActFunctor | A linear activation function. |
| parameters | The parameters for the class MLP. |
| sigmoidFunctor | A sigmoid activation function. |
| signFunctor | A sign activation function (1.0 if input 0 or positive, -1.0 otherwise). |
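To make the activation functors listed above concrete, here is a minimal standalone sketch. It does not use LTI-Lib: the names `LinearAct`, `SigmoidAct`, and `SignAct` are hypothetical, and the slope parameter and the logistic form of the sigmoid are assumptions rather than the library's documented definitions. Only the sign rule (1.0 for zero or positive input, -1.0 otherwise) is taken from the description above.

```cpp
#include <cmath>

// Hypothetical stand-ins for the activation functors listed above.
// These are NOT the LTI-Lib classes; names and signatures are assumptions.

// Linear activation: the output is the input scaled by a constant slope.
struct LinearAct {
  double slope;
  explicit LinearAct(double s = 1.0) : slope(s) {}
  double operator()(double x) const { return slope * x; }
};

// Logistic sigmoid (assumed form): maps any real input into (0, 1).
struct SigmoidAct {
  double slope;
  explicit SigmoidAct(double s = 1.0) : slope(s) {}
  double operator()(double x) const {
    return 1.0 / (1.0 + std::exp(-slope * x));
  }
};

// Sign activation: 1.0 if the input is 0 or positive, -1.0 otherwise.
struct SignAct {
  double operator()(double x) const { return x >= 0.0 ? 1.0 : -1.0; }
};
```

Each functor is a small callable object, so a layer can apply it to every unit without knowing which activation was chosen.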

Public Member Functions

| Return type | Member |
| --- | --- |
| | MLP () |
| | MLP (const MLP &other) |
| virtual | ~MLP () |
| virtual const char * | getTypeName () const |
| MLP & | copy (const MLP &other) |
| MLP & | operator= (const MLP &other) |
| virtual classifier * | clone () const |
| const parameters & | getParameters () const |
| virtual bool | train (const dmatrix &input, const ivector &ids) |
| virtual bool | train (const dvector &weights, const dmatrix &input, const ivector &ids) |
| virtual bool | classify (const dvector &feature, outputVector &result) const |
| virtual bool | write (ioHandler &handler, const bool complete=true) const |
| virtual bool | read (ioHandler &handler, const bool complete=true) |
| const dvector & | getWeights () const |

Multi-layer perceptrons.

This class implements multi-layer neural networks using different training methods.

A number of layers between 1 and 3 is allowed.

Training methods implemented at this time are:

The following example shows how to use this classifier:

    double inData[] = {-1,-1, -1, 0, -1,+1, +0,+1, +1,+1,
                       +1,+0, +1,-1, +0,-1, +0,+0};
    lti::dmatrix inputs(9,2,inData);      // training vectors

    int idsData[] = {1,0,1,0,1,0,1,0,1};  // and the respective ids
    lti::ivector ids(9,idsData);

    lti::MLP ann;  // our artificial neural network

    lti::MLP::parameters param;
    lti::MLP::sigmoidFunctor sigmoid(1);
    param.setLayers(4,sigmoid);  // two layers and four hidden units.
    param.trainingMode = lti::MLP::parameters::ConjugateGradients;
    param.maxNumberOfEpochs=200;
    ann.setParameters(param);

    // we want to see some info while training
    streamProgressInfo prog(std::cout);
    ann.setProgressObject(prog);

    // train the network
    ann.train(inputs,ids);

    // let us save our network for future use
    // in the file called mlp.dat
    std::ofstream out("mlp.dat");
    lti::lispStreamHandler lsh(out);

    // save the network
    ann.write(lsh);
    // close the file
    out.close();

    // show some results with the same training set:

    lti::MLP::outputVector outv; // here we will get some
                                 // classification results
    cout << endl << "Results: " << endl;

    int i,id;
    for (i=0;i<inputs.rows();++i) {
      ann.classify(inputs.getRow(i),outv);
      cout << "Input " << inputs.getRow(i) << " \tOutput: ";
      outv.getId(outv.getWinnerUnit(),id);
      cout << id;
      if (id != ids.at(i)) {
        cout << " <- should be " << ids.at(i);
      }
      cout << endl;
    }

Better display for the classification of 2D problems can be generated using the functor lti::classifier2DVisualizer.

lti::MLP::MLP ()

default constructor

virtual lti::MLP::~MLP () [virtual]

destructor

virtual bool lti::MLP::classify (const dvector &feature, outputVector &result) const [virtual]

Classification.

Classifies the feature and returns the outputVector with the classification result.

Parameters:

| Parameter | Description |
| --- | --- |
| feature | the vector to be classified |
| result | the result of the classification |

Implements lti::supervisedInstanceClassifier.
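As a rough illustration of what classifying a feature vector with a layered network involves, the following standalone sketch propagates an input through fully connected layers and picks the strongest output unit. It is not the LTI-Lib implementation: the `Layer` type, the `propagate` and `winnerUnit` helpers, the bias-first weight layout, and the logistic activation are all assumptions made for this example.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical layout (an assumption, not LTI-Lib's): one row per unit,
// holding the unit's bias followed by one weight per input.
using Layer = std::vector<std::vector<double>>;

inline double logistic(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// Computes the activations of one layer for the given input vector.
// Each unit row is expected to hold in.size() + 1 values.
std::vector<double> propagate(const Layer& layer,
                              const std::vector<double>& in) {
  std::vector<double> out;
  out.reserve(layer.size());
  for (const auto& unit : layer) {
    double sum = unit[0];  // bias term
    for (std::size_t i = 0; i < in.size(); ++i) {
      sum += unit[i + 1] * in[i];
    }
    out.push_back(logistic(sum));
  }
  return out;
}

// Feeds x through all layers and returns the index of the output unit
// with the largest activation (the "winner").
std::size_t winnerUnit(const std::vector<Layer>& net, std::vector<double> x) {
  for (const auto& layer : net) {
    x = propagate(layer, x);
  }
  std::size_t best = 0;
  for (std::size_t k = 1; k < x.size(); ++k) {
    if (x[k] > x[best]) best = k;
  }
  return best;
}
```

In the usage example earlier on this page, the equivalent step is the call to `outv.getWinnerUnit()` followed by `outv.getId(...)`, which maps the winning unit back to a class id.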

virtual classifier* lti::MLP::clone () const [virtual]

returns a pointer to a clone of this classifier.

Implements lti::classifier.

MLP& lti::MLP::copy (const MLP &other)

Copies the data of the "other" classifier.

| other | the classifier to be copied |

Reimplemented from lti::supervisedInstanceClassifier.

const parameters& lti::MLP::getParameters () const

returns used parameters

Reimplemented from lti::supervisedInstanceClassifier.

virtual const char* lti::MLP::getTypeName () const [virtual]

returns the name of this type ("MLP")

Reimplemented from lti::supervisedInstanceClassifier.

const dvector& lti::MLP::getWeights () const

Return a reference to the internal weights vector.

Used mainly for debugging purposes.

MLP& lti::MLP::operator= (const MLP &other)

Alias for the copy member.

| other | the classifier to be copied |

Reimplemented from lti::supervisedInstanceClassifier.

virtual bool lti::MLP::read (ioHandler &handler, const bool complete=true) [virtual]

Reads the MLP classifier from the given ioHandler.

Parameters:

| Parameter | Description |
| --- | --- |
| handler | the ioHandler to be used |
| complete | if true (the default), the enclosing begin/end will also be read; otherwise only the data block will be read. |

Reimplemented from lti::classifier.

virtual bool lti::MLP::train (const dvector &weights, const dmatrix &input, const ivector &ids) [virtual]

Supervised training.

This method, used mainly for debugging purposes, initializes the weights with the given values.

The network is trained with the vectors in the input matrix, using the values given in ids as their "known" classes.

Parameters:

| Parameter | Description |
| --- | --- |
| weights | this vector is used to initialize the weights. Must be consistent with the parameters. |
| input | the matrix with input vectors (each row is a training vector) |
| ids | the output class ids for the input vectors. |
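The requirement that the weights vector be consistent with the parameters can be pictured as a size check against the network topology. The sketch below is only an illustration under an assumed layout (every unit owns one bias plus one weight per input from the previous layer); the actual ordering and size used by lti::MLP are not stated on this page, and `expectedWeightCount` and `weightsAreConsistent` are hypothetical helpers.

```cpp
#include <cstddef>
#include <vector>

// sizes = {inputs, hidden units..., outputs}.
// Assumed layout (not confirmed by the documentation): each unit has one
// bias plus one weight per unit of the previous layer.
std::size_t expectedWeightCount(const std::vector<std::size_t>& sizes) {
  std::size_t total = 0;
  for (std::size_t l = 1; l < sizes.size(); ++l) {
    total += (sizes[l - 1] + 1) * sizes[l];
  }
  return total;
}

// True if the initial weights vector has exactly the size the topology needs.
bool weightsAreConsistent(const std::vector<double>& weights,
                          const std::vector<std::size_t>& sizes) {
  return weights.size() == expectedWeightCount(sizes);
}
```

Under this assumption, a network with 2 inputs, 4 hidden units, and 2 outputs (as in the usage example on this page) would need (2+1)*4 + (4+1)*2 = 22 weights.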

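virtual bool lti::MLP::train (const dmatrix &input, const ivector &ids) [virtual]

Supervised training.

The network is trained with the vectors in the input matrix, using the values given in ids as their "known" classes.

Parameters:

| Parameter | Description |
| --- | --- |
| input | the matrix with input vectors (each row is a training vector) |
| ids | the output class ids for the input vectors. |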

Implements lti::supervisedInstanceClassifier.

virtual bool lti::MLP::write (ioHandler &handler, const bool complete=true) const [virtual]

Writes the MLP classifier to the given ioHandler.

Parameters:

| Parameter | Description |
| --- | --- |
| handler | the ioHandler to be used |
| complete | if true (the default), the enclosing begin/end will also be written; otherwise only the data block will be written. |

Reimplemented from lti::classifier.
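The effect of the `complete` flag on write and read can be sketched with plain standard streams. This example does not use lti::ioHandler: the parenthesis pair standing in for the enclosing begin/end, the space-separated data block, and the `writeWeights` and `readWeights` helpers are all assumptions chosen for illustration.

```cpp
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Writes the values as a space-separated data block. If complete is true,
// the block is wrapped in "(" and ")" as a stand-in for begin/end markers.
void writeWeights(std::ostream& out, const std::vector<double>& w,
                  bool complete = true) {
  if (complete) out << "(";
  for (std::size_t i = 0; i < w.size(); ++i) {
    if (i > 0) out << ' ';
    out << w[i];
  }
  if (complete) out << ")";
}

// Reads the data block back. If complete is true, the enclosing markers
// are expected and consumed; otherwise only the bare values are read.
bool readWeights(std::istream& in, std::vector<double>& w,
                 bool complete = true) {
  char c = 0;
  if (complete && (!(in >> c) || c != '(')) return false;
  w.clear();
  double v = 0.0;
  while (in >> v) w.push_back(v);
  if (complete) {
    in.clear();  // the failed number extraction stopped at the end marker
    if (!(in >> c) || c != ')') return false;
  }
  return true;
}
```

Writing and reading must agree on the flag: a block written with `complete` set to false carries no markers, so reading it with `complete` set to true fails in this sketch.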